Our setup includes two Velodyne VLP-16 LIDARs, a mid-range IMU, and wheel encoder odometry, all mounted on a mobile robot. Sensor data is collected as the robot moves around its environment and is fed through the Cartographer algorithm online. Cartographer is not meant to be an online algorithm, but it can be tuned for low latency, and for our purposes that has worked fine. Once enough of the space has been mapped, the sensor data is fed through the "Assets Writer", which aligns poses from the pose graph with the sensor data to build both a 2D map and a 3D point cloud of the space. We have found these maps to be accurate to within about 2-5 cm.
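To make the "aligning poses with sensor data" step more concrete, here is a minimal sketch of the idea in Python. It is not the Assets Writer's actual code or API: it works in 2D for brevity (our real setup is 3D), and every name in it (`interpolate_pose`, `scan_to_map`, `build_cloud`) is made up for illustration. It assumes a time-sorted trajectory of optimized poses and a list of time-stamped scans in the sensor frame.

```python
import math
from bisect import bisect_right


def interpolate_pose(trajectory, t):
    """Linearly interpolate an (x, y, yaw) pose at time t.

    trajectory is a time-sorted list of (time, x, y, yaw) tuples
    with at least two entries.
    """
    times = [node[0] for node in trajectory]
    # Clamp the index so a valid pair of neighbouring poses is always used
    # (times outside the trajectory are extrapolated from the end pair).
    i = min(max(bisect_right(times, t), 1), len(trajectory) - 1)
    t0, x0, y0, yaw0 = trajectory[i - 1]
    t1, x1, y1, yaw1 = trajectory[i]
    a = (t - t0) / (t1 - t0)
    # Interpolate yaw along the shortest angular difference.
    dyaw = math.atan2(math.sin(yaw1 - yaw0), math.cos(yaw1 - yaw0))
    return (x0 + a * (x1 - x0), y0 + a * (y1 - y0), yaw0 + a * dyaw)


def scan_to_map(points, pose):
    """Rotate and translate sensor-frame (x, y) points into the map frame."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in points]


def build_cloud(trajectory, scans):
    """Accumulate all scans into one map-frame point list.

    scans is a list of (time, points) tuples.
    """
    cloud = []
    for stamp, points in scans:
        cloud.extend(scan_to_map(points, interpolate_pose(trajectory, stamp)))
    return cloud
```

The point of the sketch is that the map is only as good as the poses: each scan is placed using the optimized pose at its timestamp, so any pose error shows up directly as smearing in the point cloud.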

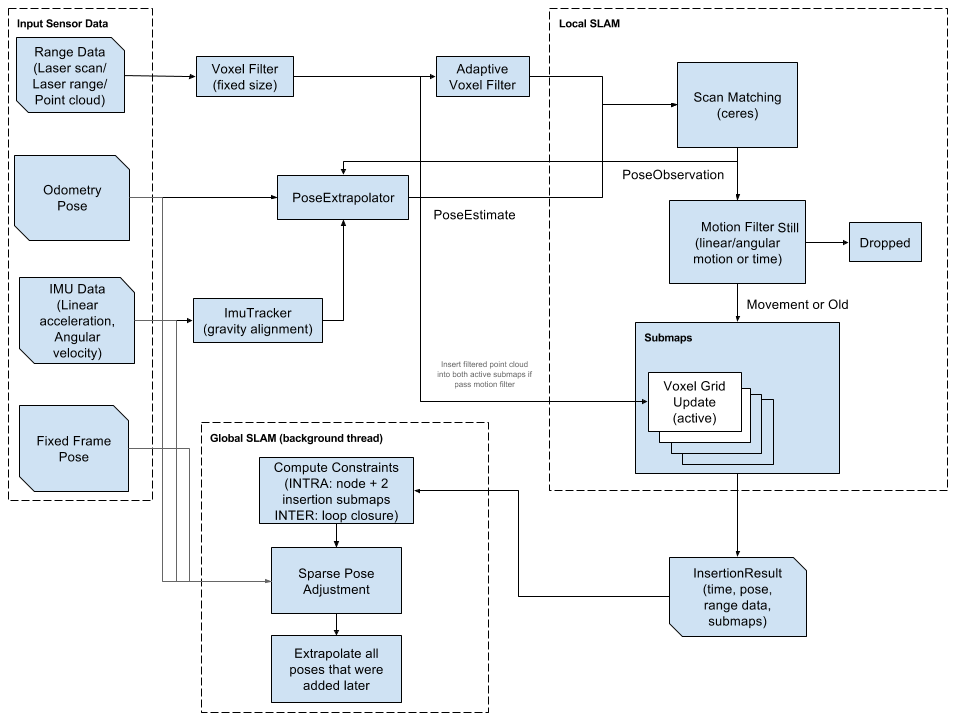

The Cartographer algorithm works in two parts. The first is known as Local SLAM and consists of:

- A pose estimate created by scan matching the incoming laser range data

- The insertion of that laser range data into a "submap"

- Creating constraints within individual submaps (intra-submap). These are simply differences in poses after a significant movement.

The second is known as Global SLAM and consists of:

- Creating constraints between different submaps (inter-submap). These constraints are the differences between poses that exist on multiple submaps or are close enough to each other. They help to connect the different submaps together.

- Performing global optimization by way of "sparse pose adjustment". This step uses the computed constraints and tries to move each submap around so that it meets those constraints with minimal error.

- Finally, extrapolating all of the poses onto the newly created map with minimal error.
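The optimization step is easier to picture with a toy example. The sketch below is not Cartographer's solver; it is a deliberately tiny 1D pose graph, minimized with plain gradient descent, where the function name `optimize_pose_graph` and all of the numbers are made up for illustration. Three odometry-style constraints say each pose is 1.0 ahead of the previous one, while a loop-closure-style constraint says the last pose is only 2.7 ahead of the first.

```python
def optimize_pose_graph(num_poses, constraints, iterations=2000, step=0.05):
    """Minimise the sum of weight * ((x_j - x_i) - measured)^2 over 1D poses.

    constraints is a list of (i, j, measured_offset, weight) tuples.
    Pose 0 is held fixed at 0.0 to anchor the map.
    """
    poses = [0.0] * num_poses
    for _ in range(iterations):
        grad = [0.0] * num_poses
        for i, j, measured, weight in constraints:
            residual = (poses[j] - poses[i]) - measured
            grad[j] += 2.0 * weight * residual
            grad[i] -= 2.0 * weight * residual
        # Skip pose 0 so the whole graph cannot drift.
        for k in range(1, num_poses):
            poses[k] -= step * grad[k]
    return poses


constraints = [
    (0, 1, 1.0, 1.0),  # odometry-style constraint between neighbours
    (1, 2, 1.0, 1.0),
    (2, 3, 1.0, 1.0),
    (0, 3, 2.7, 1.0),  # loop-closure-style constraint
]
poses = optimize_pose_graph(4, constraints)
```

With equal weights, the 0.3 disagreement is spread evenly across all four constraints, so the poses settle at roughly 0.925, 1.85 and 2.775. Raising the weight on one constraint makes the optimizer honor it more closely at the expense of the others, which is the same trade-off the real tuning parameters control.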

This is an image of the algorithm from the Cartographer website.

After mapping, we output a point cloud that looks like this, showing the area that we mapped:

As I have become more familiar with the Cartographer package, I have run into a few tricks and pitfalls:

- The Cartographer algorithm has proven to be very sensitive to IMU location. Even the slightest discrepancy between the true and modeled locations can cause major warping of the submaps, especially when using Cartographer 3D, which we do.

- Tuning Cartographer is challenging because there are many knobs to turn, and because the tuned parameters are often dimensionless scalar values that multiply against relative errors.

- Latency can be reduced, but at the cost of map accuracy.

- If scan matching is tuned well, the odometry can have a very small impact on the resulting map.

- The orientation of the LIDARs has a strong effect on the resulting map. In our use case, we mount one LIDAR horizontally and one vertically, so that we see the full space around the robot plus a vertical strip that captures more of the ceiling and floor. This turned out to work better than angling the LIDARs to get more non-overlapping visibility.
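To illustrate why dimensionless weights are awkward to tune, and why odometry can end up mattering very little, here is a toy sketch. It is not how Cartographer combines its inputs internally; it reduces the problem to one dimension with two hypothetical estimates of the same position, and `fuse_estimates`, `scan_weight` and `odom_weight` are names invented for this example.

```python
def fuse_estimates(scan_match, odometry, scan_weight, odom_weight):
    """Return the x that minimises
    scan_weight * (x - scan_match)^2 + odom_weight * (x - odometry)^2,
    which is the weighted mean of the two estimates.
    """
    total = scan_weight + odom_weight
    return (scan_weight * scan_match + odom_weight * odometry) / total


scan_match, odometry = 1.00, 1.10  # two disagreeing position estimates
for odom_weight in (1.0, 0.1, 0.01):
    fused = fuse_estimates(scan_match, odometry,
                           scan_weight=1.0, odom_weight=odom_weight)
    print(f"odom_weight={odom_weight}: fused={fused:.4f}")
```

Only the ratio of the two weights matters here, not their absolute values, which is part of what makes this kind of tuning unintuitive. As the odometry weight shrinks relative to the scan matching weight, the fused result moves toward the scan match estimate and the odometry has almost no say.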

Thanks for reading!
